Case for Testing: David Schneider, Principal Technologist, Ixia

Network testing is seldom discussed compared with other technologies and markets. Yet insufficient testing can lead to service outages, security breaches and lost customers.

Insufficient testing leaves room for unknown risks. Here are a few examples:

Telecom XT’s mobile network suffered four service outages between December 25, 2009 and February 25, 2010. Alcatel-Lucent, which had won the contract to install the XT network, could not pinpoint the exact cause of the outages. Telecom XT and its partners are still trying to convince their wireless customers to stay with them. Proper pre-deployment testing could have prevented this.

In April 2010, McAfee’s enterprise customers had a major outage involving millions of machines. McAfee had not tested its virus update on the latest version of Windows XP, and the update caused corporate customers’ computers to reboot continuously. Had there been proper testing, this costly outage could have been avoided.

AT&T, in September 2009, was forced to stop offering its iPhone data service in New York City. The iPhone’s constant network pinging, which causes large amounts of traffic, overwhelmed AT&T’s network. Real-world testing could have prevented this.

Network equipment manufacturers (NEMs), Ixia’s largest customers, perform testing on their routers, switches, application delivery controllers and other devices. They acknowledge that testing is essential at all points in their development cycle: from design to acceptance, integration and release.

While developing, testing and manufacturing their network components, they deploy different types of testing:

• Functional testing to ensure that their products perform their basic functions correctly. This is especially important during the development process.

• Conformance testing to ensure their products perform the functions detailed in industry specifications. These tests are designed to cover each feature and option appearing in the specification.

• Performance testing to determine capacities: the number of connections, the speed at which new connections are made, the maximum number of sessions, throughput, latency and other measurements. These figures are often used to populate data sheets and to make competitive comparisons.

• Security testing to ensure that device functionality and performance are not vulnerable to various known attacks.

Organisations that use these network products within a service provider’s network, enterprise data centre, government agency or private network frequently skip testing. This may be owing to several factors:

• They do not want to repeat the process, assuming that the NEM has already tested its devices.

• They trust that the integrator will test the products before putting their network together.

• They often count on others with similar networks to have carried out the testing.

• Testing is too complex, involving dozens of routing and application protocols.

• Network testing is expensive – both for the equipment and its operation.

Even if some of these are legitimate views, it is still important that network testing be carried out for devices, subsystems and complete services, covering interoperability, real-world conditions, security and network updates.

Interoperability testing is necessary because networks contain devices from multiple vendors that perform similar or related functions. Even if all the devices come from the same vendor, some may have been purchased several years earlier. This matters most during the early years of a protocol’s development, when devices from different manufacturers may support only earlier versions of the protocol.

This can cause problems. Devices can have incompatible feature sets, making it difficult to use their full capabilities, and can have different performance characteristics. For example, one broadband aggregation device might handle 1,000 users, while the device that it feeds may be able to handle only 800.
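A capacity mismatch of this kind can be checked on paper before any equipment is connected. The following is a minimal Python sketch rather than a vendor tool. The device names and the function name are hypothetical; the user counts are the ones from the example above.

```python
def limiting_device(capacities):
    """Return (name, capacity) of the device that limits a chain of devices in series.

    `capacities` maps a device name to the number of users it can handle.
    """
    name = min(capacities, key=capacities.get)
    return name, capacities[name]


# Hypothetical device names; the user counts come from the example in the text.
chain = {"broadband_aggregator": 1000, "downstream_device": 800}

name, users = limiting_device(chain)
print(f"End-to-end capacity is {users} users, limited by {name}")
```

A check like this only compares data-sheet figures. It does not replace testing the devices together, since feature incompatibilities do not show up in capacity numbers.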

Vendor and integrator performance specifications are sometimes based on ideal conditions that may not reflect real-world use. For example, an application delivery controller is designed to balance the priority of different voice, video and data flows. The vendor provides statistics such as the maximum number of HTTP connections and video streams, but it is almost impossible for the vendor to provide performance measurements for every percentage mix of traffic.

To determine what a network can achieve, each user needs to test it under their own conditions. These include the number of connections, the types of traffic and the percentage of each, and the maximum connection rate.
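These conditions can be written down as a test profile before any test equipment is configured. The sketch below is one possible way to do that in Python. The class name, field names and all figures are illustrative assumptions, not taken from any particular test product.

```python
from dataclasses import dataclass


@dataclass
class TrafficProfile:
    total_connections: int      # concurrent connections to simulate
    max_connection_rate: int    # new connections per second
    mix: dict                   # traffic type -> whole-number percentage

    def connections_per_type(self):
        """Split the total connections across traffic types according to the mix."""
        if sum(self.mix.values()) != 100:
            raise ValueError("traffic mix percentages must sum to 100")
        return {name: self.total_connections * pct // 100
                for name, pct in self.mix.items()}

    def ramp_seconds(self):
        """Shortest time needed to open every connection at the maximum rate."""
        return self.total_connections / self.max_connection_rate


# Illustrative figures only.
profile = TrafficProfile(
    total_connections=10_000,
    max_connection_rate=500,
    mix={"voice": 20, "video": 30, "data": 50},
)
print(profile.connections_per_type())
print(profile.ramp_seconds())
```

Keeping the mix explicit makes it easy to rerun the same test with a different percentage split, which is exactly the case that vendor data sheets cannot cover.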

For example, a multinational organisation changed its frame relay connections to use the internet, with VPN gateways that use Internet Protocol Security (IPsec). They based their bandwidth requirement on the throughput figure in the VPN gateway’s spec sheet. However, when the system was deployed, they found that their VoIP services were operating poorly long before they ran out of bandwidth.

It turned out that the VoIP packets were small, and the VPN gateways could handle only one-tenth of the rated traffic when processing small packets.
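One common explanation for a result like this is that a gateway is limited by the number of packets it can process per second rather than by the number of bits. The Python sketch below works through the arithmetic under that simplifying assumption. The rated throughput and both packet sizes are hypothetical figures chosen to reproduce the one-tenth ratio; they are not measurements from the deployment described above.

```python
def max_packet_rate(rated_bps, rated_packet_bytes):
    """Packets per second implied by a throughput rating at a given packet size."""
    return rated_bps / (rated_packet_bytes * 8)


def throughput_at(packet_rate, packet_bytes):
    """Bits per second achieved when the same packet rate carries smaller packets."""
    return packet_rate * packet_bytes * 8


# Hypothetical figures: a 100 Mbit/s rating measured with 1,400-byte packets,
# and VoIP traffic carried in 140-byte packets.
rated_bps = 100_000_000
pps = max_packet_rate(rated_bps, 1400)
small_packet_bps = throughput_at(pps, 140)

ratio = small_packet_bps / rated_bps
print(f"Small-packet throughput is about {ratio:.0%} of the rated figure")
```

Real gateways are rarely limited purely by packet rate, so the actual ratio has to be measured. This is why a test that uses the expected packet-size mix is more informative than the spec sheet.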

There are many components involved in a security implementation: firewalls, intrusion prevention systems, antivirus software, anti-spam techniques and unified threat management solutions. When security is offered as a service, many more components are involved.

Each component provides a different type of security and can perform it to a different degree and extent. But each additional protection mechanism can reduce the amount of critical business traffic the network can carry. Security testing with actual configurations is essential to ensure network security.

As with all other network devices, protection is traded off against throughput. Because of this, each network’s security implementation is different, requiring individualised testing. For example, Telefónica suffered a multi-day outage in 2009 due to a distributed denial of service attack, which took advantage of configuration errors in the company’s central network and customer premises equipment. This outage could have been prevented by testing to determine the vulnerabilities. Testing of this type draws on knowledge of known vulnerabilities and involves penetration attempts, malware and other techniques.

Almost every network changes over time, with new users, additional usage of existing services, addition of new services and a sea of device firmware and software updates. Testing is therefore never finished, and it is necessary to keep applying these techniques.

Service providers and larger enterprises use pre-deployment labs, where they test scaled-down versions of their networks. Smaller organisations must wait until they can take their network out of service to perform a certain amount of testing. Depending on the services provided, it may be possible to isolate just a part of the network for testing. Where there is no pre-deployment lab and the network cannot be taken out of service, service monitoring is the only alternative. This type of testing involves watching network traffic and/or injecting test sessions to measure the network’s performance.
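Injecting test sessions can be as simple as opening connections to a service at intervals and recording how long each one takes. The following Python sketch illustrates the idea using only the standard library. The function names are hypothetical, and a TCP connect time is only a rough stand-in for the service-level measurements a commercial monitoring tool would make.

```python
import socket
import statistics
import time


def probe_tcp(host, port, attempts=5, timeout=2.0):
    """Open `attempts` TCP test sessions; return connect times in ms (None on failure)."""
    results = []
    for _ in range(attempts):
        start = time.perf_counter()
        try:
            with socket.create_connection((host, port), timeout=timeout):
                results.append((time.perf_counter() - start) * 1000)
        except OSError:
            # Covers refused connections, timeouts and name-resolution failures.
            results.append(None)
    return results


def summarise(results):
    """Reduce raw probe results to a success rate and a median connect time."""
    ok = [r for r in results if r is not None]
    return {
        "success_rate": len(ok) / len(results) if results else 0.0,
        "median_ms": statistics.median(ok) if ok else None,
    }


# Sample results standing in for a real probe run: one failure, two successes.
print(summarise([None, 10.0, 20.0]))
```

In use, the results of `probe_tcp` for a service's host and port would be passed to `summarise` on a schedule, and the summaries compared over time to spot degradation.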

In recent years, IT organisations have moved their platforms and applications to the cloud. Enterprises generally use the vast facilities of commercial cloud service providers. At times, however, they maintain their own centralised cloud computing site.

Although the infrastructure has moved off-site, there is no less need for pre-deployment testing. In fact, the cloud enables such testing without any additional equipment or scheduled downtime through the simple expedient of building a temporary, sample test environment. Such test set-ups are generally one-tenth the size of the normal network and still provide quality measurements.

Virtualisation is the key enabling technology for cloud computing, utilising many virtual machines on powerful host computers. Many virtualised hosts are assembled together to establish large data centres, along with standard networking components – routers, switches, server load balancers, networking appliances and storage area networks.

However, with centralisation, IT organisations lose control over the communications network. They do not have the ability to tune hosts, networks and storage; the cloud service provider takes care of these.

This, of course, means that network and application testing is more necessary than ever. The capacity, latency, throughput and end-user quality of experience must be measured in realistic scenarios, especially in cases where the shared hosts are used by other applications. Most often, service providers do not offer quality guarantees, so quality must be regularly tested by the application owner.

Security testing, too, is a challenge in cloud computing. Large numbers of clients share cloud computing facilities and must be isolated from each other. Further, cloud data centres represent a “big piggy bank”, where a large number of hacker targets are co-located. This may mean that cloud-hosted applications have to be made more secure than when run in private data centres.

Another compelling reason for using cloud services is the ability to respond quickly to increased demand, often referred to as cloud elasticity. Virtualisation tools allow new virtual machines to be instantiated quickly and/or moved to other hosts. These creation and movement operations introduce latency, which must be measured and judged as acceptable.

Network and performance testing are often ignored or insufficiently supported. Skipping or minimising pre-deployment testing may appear to save money in the short run, but it can be far more expensive in the long run.

Network and application performance problems can lead to expensive downtime, sometimes measured in millions of dollars per hour. Programming and configuration errors can open up applications to security breaches, which can be very expensive. They can result in loss of data, outright theft of information, legal exposure and customer churn.

In June this year, for example, Sony suffered a break-in at its gaming website. It incurred $171 million in direct costs and expects that the total will likely be more than $1 billion in remedies, brand damage and lost business. Two attacks succeeded: one involved a legal purchase that then took advantage of a known, unpatched vulnerability in an internal application server; the other used a well-known technique, SQL injection, against the Sony Music Japan site.
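SQL injection is one of the programming errors that routine security testing can catch before deployment. The Python sketch below shows the general pattern using the standard `sqlite3` module. It is a generic illustration and not a reconstruction of the attack described above; the table, function names and test input are all hypothetical.

```python
import sqlite3


def find_user_unsafe(conn, name):
    # Vulnerable: user input is concatenated directly into the SQL text.
    query = "SELECT name FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, name):
    # Parameterised: the input is passed to the database as data, not as SQL.
    return conn.execute("SELECT name FROM users WHERE name = ?", (name,)).fetchall()


# Hypothetical in-memory table used only for this illustration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT)")
conn.executemany("INSERT INTO users VALUES (?)", [("alice",), ("bob",)])

# A classic test input that turns the WHERE clause into an always-true condition.
payload = "x' OR '1'='1"

print(len(find_user_unsafe(conn, payload)))  # every row leaks
print(len(find_user_safe(conn, payload)))    # no row matches the literal string
```

A security test suite would run inputs like `payload` against every query path and fail the build if any of them returns rows it should not.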

- Most Viewed

- Most Rated

- Most Shared

- Related Articles

- Rural Telecom in India: Abhishek Chauhan...

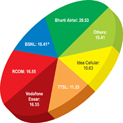

- Gujarat telecom market

- Future of mobile applications in India: ...

- Shared infrastructure in India: Jaideep ...

- Broadband in India: Santosh Anchan, Port...

- Passive Telecom Infrastrucure In India: ...

- Infrastructure sharing in India: Arun Ka...

- 3G users in India will touch 400 million...

- Streamlining Communication Product Lifec...

- T&M in India: Rajesh Toshniwal, Founder ...